A local AI for your own documents can be really useful: Your own chatbot reads all important documents once and then provides the right answers to questions such as:

"What is the excess for my car insurance?" or

"Does my supplementary dental insurance also cover inlays?"

If you are a fan of board games, you can hand over all the game instructions to the AI and ask the chatbot questions such as:

"Where can I place tiles in Qwirkle?"

We have tested how well this works on typical home PCs.


Requirements

To be able to query your own documents with a completely local artificial intelligence, you essentially need three things: a local AI model, a database containing your documents, and a chatbot.

These three elements are provided by AI tools such as Anything LLM and Msty. Both programs are free of charge.

Install the tools on a PC with at least 8GB of RAM and a CPU that is as up-to-date as possible. There should be 5GB or more free space on the SSD.

Ideally, you should have a powerful graphics card from Nvidia or AMD. This overview of compatible models can help you.

By installing Anything LLM or Msty, you get a chatbot on your computer. After installation, the tools load an AI language model, a so-called large language model (LLM), into the program.

Which AI model runs in the chatbot depends on the performance of your PC. Operating the chatbot is not difficult if you know the basics. Only the extensive setting options of the tools require expert knowledge.

But even with the standard settings, the chat tools are easy to use. In addition to the AI model and the chatbot, Anything LLM and Msty also include an embedding model, which reads in your documents and prepares them in a local database so that the language model can access them.

More is better: Small AI models are hardly any good

There are AI language models that also run on weak hardware. For local AI, weak means a PC with only 8GB of RAM, a CPU that is already a few years old, and no good Nvidia or AMD graphics card.

AI models that still run on such PCs usually have 2 to 3 billion parameters (2B or 3B) and have been simplified by quantization.

This reduces memory requirements and computing power, but also worsens the results. Examples of such variants are Gemma 2 2B or Llama 3.2 3B.
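To make the effect of parameter count and quantization concrete, the sketch below estimates how much memory the model weights alone occupy. It assumes the nominal parameter counts from the model names (the real counts differ slightly) and ignores runtime overhead such as the context cache, so actual requirements are somewhat higher. `weight_memory_gb` is a hypothetical helper for illustration, not part of Anything LLM or Msty.

```python
def weight_memory_gb(parameters: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone in GB (10**9 bytes).

    Assumption: every weight is stored with the same number of bits;
    runtime overhead (context cache, buffers) is not included.
    """
    total_bytes = parameters * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


# Nominal sizes taken from the model names; real parameter counts differ slightly
models = {"Gemma 2 2B": 2e9, "Llama 3.2 3B": 3e9, "7B model": 7e9}

for name, params in models.items():
    full = weight_memory_gb(params, 16)      # unquantized 16-bit weights
    quantized = weight_memory_gb(params, 4)  # 4-bit quantization
    print(f"{name}: {full:.1f} GB at 16 bit, {quantized:.1f} GB at 4 bit")
```

Under these assumptions, a 7B model needs about 14 GB for its weights at 16 bit but only about 3.5 GB at 4 bit, which helps explain why quantized small models are the ones that fit on a PC with 8GB of RAM.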

Although these language models are comparatively small, they provide surprisingly good answers to a large number of questions or generate usable texts according to your specifications — completely locally and in an acceptable amount of time.

If you have a good graphics card from Nvidia or AMD, you will get a much faster AI chatbot. This applies both to the embedding process and to the waiting time for a response. In addition, more sophisticated AI models can usually be used.


However, when it comes to the local language model taking your documents into account, these small models deliver results that are somewhere between “unusable” and “just acceptable.” How good or bad the answers are depends on many factors, including the type of documents.

In our first tests with local AI and local documents, the results were so poor that we suspected something had gone wrong with the embedding of the documents.


The responses only improved when we switched to a model with 7 billion parameters, and a trial run with the online model ChatGPT 4o showed how good the responses can be. So the embedding was not the problem.

In fact, the biggest lever when using local AI with your own documents is the AI model: the bigger it is, i.e. the more parameters it has, the better. The other factors, such as the embedding model, the chatbot (Anything LLM or Msty), and the vector database, play a much smaller role.

Embedding & retrieval augmented generation

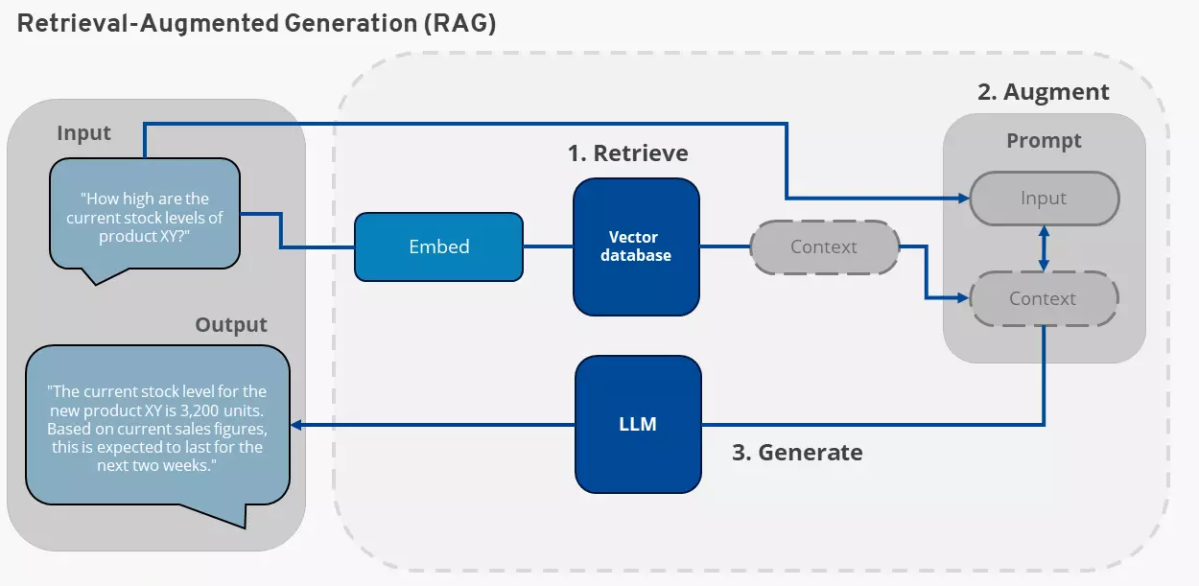

Cloud provider Ionos explains how retrieval augmented generation (RAG) works here. This is the method by which an AI language model takes your local documents into account in its responses. Ionos itself offers AI chatbots for customers’ own documents, but these usually run entirely in the cloud.


Your own data is connected to the AI using a method called embedding and retrieval augmented generation (RAG).

For tools such as Anything LLM and Msty, it works like this: Your local documents are analyzed using an embedding model. This model converts the content of the documents into vectors, i.e. series of numbers that represent its meaning, and stores them.

Instead of a document, the embedding model can also process information from a database or other knowledge sources.

However, the result is always a vector database that contains the essence of your documents or other sources. Because the content is stored as vectors, passages can be found by their meaning and then passed to the AI language model.
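The embedding step can be pictured with the toy sketch below. A real embedding model such as All Mini LM L6 v2 is a neural network that maps text to vectors reflecting its meaning. Here, as a deliberately simplified stand-in, the hypothetical `toy_embed` function just hashes the words of a passage into a fixed-length vector, so it captures shared words rather than meaning. The sample passages are invented for illustration.

```python
import hashlib
import math

DIM = 64  # toy vector length; real models use far more (all-MiniLM-L6-v2 outputs 384)


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: hashed bag of words, scaled to length 1."""
    vec = [0.0] * DIM
    for raw in text.lower().split():
        word = raw.strip(".,;:!?\"'()")
        if not word:
            continue
        # md5 instead of hash(): Python's built-in hash() for str changes between runs
        slot = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[slot] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# Toy "vector database": each passage is stored together with its vector
passages = [
    "Inlays are covered by the supplementary dental insurance.",
    "The excess applies to every claim under the car insurance.",
]
vector_store = [(text, toy_embed(text)) for text in passages]

for text, vec in vector_store:
    print(len(vec), text)
```

Each entry pairs the original text with its vector; the vector is what gets searched, and the text is what later goes to the language model.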

This process is fundamentally different from a word search and a word index. The latter stores the position of an important word in a document. A vector database for RAG, on the other hand, stores which statements are contained in a text.
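For comparison, this is roughly what a classic word index records: for every word, the document and position where it occurs. The sketch is a minimal inverted index written for illustration; `build_word_index` and the sample text are hypothetical. It stores where words are, whereas a RAG vector database stores one vector per passage describing what the passage says, without any word positions.

```python
from collections import defaultdict


def build_word_index(docs: dict[str, str]) -> dict[str, list[tuple[str, int]]]:
    """Classic word index: maps each word to the (document, position) pairs where it occurs."""
    index: dict[str, list[tuple[str, int]]] = defaultdict(list)
    for doc_id, text in docs.items():
        for pos, raw in enumerate(text.lower().split()):
            word = raw.strip(".,;:!?")  # drop punctuation attached to the word
            index[word].append((doc_id, pos))
    return dict(index)


docs = {"dental.txt": "Inlays are covered. Crowns are covered too."}
index = build_word_index(docs)
print(index["covered"])  # [('dental.txt', 2), ('dental.txt', 5)]
```

A lookup for "covered" only finds that exact word and tells you where it sits. A vector database would instead answer a question about inlays by finding the passage whose vector is most similar to the vector of the question.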

This means that a question such as

"What is on page 15 of my car insurance document?"

does not usually work with RAG, because the information “page 15” is usually not contained in the vector database. In most cases, such a question causes the AI model to hallucinate: since it does not know the answer, it invents something.

Creating the vector database, i.e. embedding your own documents, is the first step. The information retrieval is the second step and is referred to as RAG.

In retrieval augmented generation, the user asks the chatbot a question. This question is converted into a vector representation and compared with the data in the vector database of the user’s own documents (retrieve).

The results from the vector database are now transferred to the chatbot’s AI model together with the original question (augment).

The AI model now generates an answer (generate) that combines its own knowledge with the information retrieved from the user’s vector database.
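The three steps (retrieve, augment, generate) can be sketched in a few lines of Python. This is a simplified illustration, not how Anything LLM or Msty are implemented: the hypothetical `embed` function uses plain word counts instead of a real embedding model, similarity is measured with cosine similarity, and the `llm` argument stands in for the local language model (in the example it simply echoes the prompt). The sample passages are invented.

```python
import math
import re
from collections import Counter
from typing import Callable


def embed(text: str) -> Counter:
    """Hypothetical toy embedding: word counts (a real model would encode meaning)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, store: list[tuple[str, Counter]], k: int = 1) -> list[str]:
    """Retrieve: return the k stored passages most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def augment(question: str, passages: list[str]) -> str:
    """Augment: combine the retrieved passages with the original question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the following excerpts:\n{context}\n\nQuestion: {question}"


def rag_answer(question: str, store: list[tuple[str, Counter]],
               llm: Callable[[str], str], k: int = 1) -> str:
    """Generate: pass the augmented prompt to the language model."""
    return llm(augment(question, retrieve(question, store, k)))


# Invented sample passages, embedded once (the "vector database")
passages = [
    "Inlays are covered by the supplementary dental insurance.",
    "In Qwirkle, new tiles are placed next to tiles already on the table.",
]
store = [(p, embed(p)) for p in passages]

echo_llm = lambda prompt: prompt  # stand-in for the local LLM; just returns its input
print(rag_answer("Does my dental insurance cover inlays?", store, echo_llm))
```

In the example, the dental passage shares the words "dental," "insurance," and "inlays" with the question, so it is retrieved and placed in the prompt, while the Qwirkle passage is left out. A real embedding model would also match passages that express the same idea in different words.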

Comparison: Anything LLM or Msty?

We have tested the two chatbots Anything LLM and Msty. Both programs are similar. However, they differ significantly in the speed with which they embed local documents, i.e. make them available to the AI. This process is generally time-consuming.

Anything LLM took 10 to 15 minutes to embed a PDF file with around 150 pages in the test. Msty, on the other hand, often took three to four times as long.

We tested both tools with their preset AI models for embedding. For Msty this is “Mixed Bread Embed Large,” for Anything LLM it’s “All Mini LM L6 v2.”

Although Msty requires considerably more time for embedding, it may be worth choosing this tool. It offers good user guidance and provides exact source information when citing. We recommend Msty for fast computers.


If your computer is less powerful, you should first try Anything LLM and check whether you can achieve satisfactory results with this chatbot. The decisive factor is the AI language model in the chatbot anyway, and both tools offer the same range of AI language models.

By the way: Both Anything LLM and Msty allow you to select alternative embedding models. In some cases, however, the configuration becomes more complicated. You can also select online embedding models, for example from OpenAI.

You don’t have to worry about accidentally selecting an online embedding model. This is because you need an API key to be able to use it.

Anything LLM: Simple and fast

Install the Anything LLM chatbot. Microsoft Defender SmartScreen may display a warning that the installation file is not secure. You can ignore this by clicking on “Run anyway.”

After installation, select an AI language model in Anything LLM. We recommend Gemma 2 2B to start with. You can replace the selected model with a different one at any time later (see “Change AI language model” below).

Next, create a workspace, either in the configuration wizard or later by clicking on “New workspace.” This is the area into which you import your own documents. Give the workspace a name of your choice and then click on “Save.”

The new workspace now appears in the left bar of Anything LLM. Click on the icon to the left of the cogwheel symbol to import your document for the AI. In the new window, click on “Click to upload or drag & drop” and select your documents.

After a few seconds, they will appear in the list above the button. Click on your document again and select “Move to Workspace,” which will move the documents to the right.

A final click on “Save and Embed” starts the embedding process, which may take some time depending on the size of the documents and the speed of your PC.

Tip: Don’t try to embed the last 30 years of PCWorld as a PDF right away. Start with a simple text document and see how long it takes your PC. This way you can quickly assess whether embedding larger documents is worthwhile.
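Once you have timed a small test document, a rough estimate for a larger one follows from simple proportion. The sketch assumes that embedding time grows roughly linearly with document size, which is only an approximation (layout, images, and the chosen embedding model also matter). `estimate_embedding_minutes` is a hypothetical helper, and the 10-page, 50-second measurement in the example is made up.

```python
def estimate_embedding_minutes(test_size: float, test_seconds: float, target_size: float) -> float:
    """Extrapolate embedding time linearly from a small test run.

    test_size and target_size must use the same unit, e.g. pages or words.
    """
    if test_size <= 0:
        raise ValueError("test_size must be positive")
    seconds_per_unit = test_seconds / test_size
    return seconds_per_unit * target_size / 60  # convert seconds to minutes


# Hypothetical measurement: a 10-page test document took 50 seconds to embed
print(f"{estimate_embedding_minutes(10, 50, 150):.1f} minutes for 150 pages")
```

For reference, the 10 to 15 minutes Anything LLM needed for a 150-page PDF in our test correspond to about 4 to 6 seconds per page; Msty, which often took three to four times as long, would then need roughly 30 to 60 minutes for the same file.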

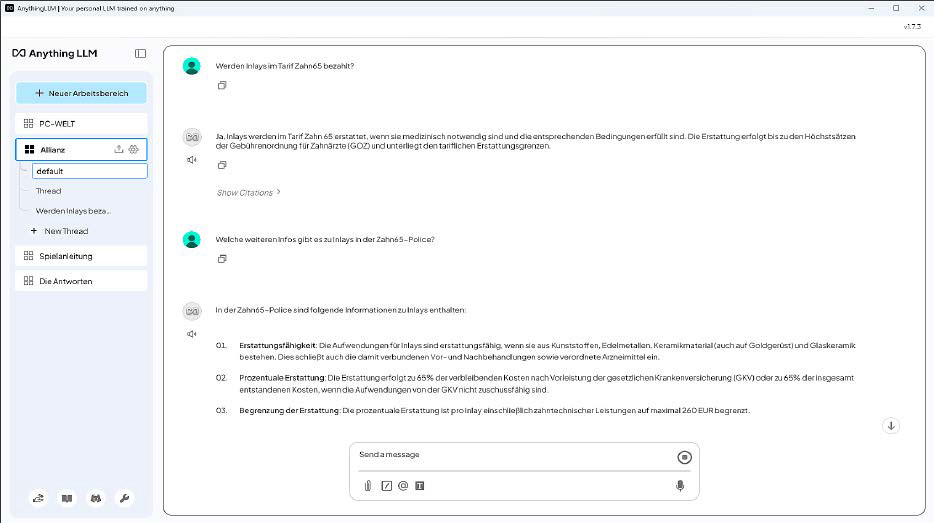

Once the process is complete, close the window and ask the chatbot your first question. In order for the chatbot to take your documents into account, you must select the workspace you have created on the left and then enter your question in the main window at the bottom under “Send a message.”

Change the AI language model: If you would like to select a different language model in Anything LLM, click on the key symbol (“Open Settings”) at the bottom left and then on “LLM.” Under “LLM provider,” select one of the suggested AI models.

The new models from DeepSeek are also offered. Clicking on “Import model from Ollama or Hugging Face” gives you access to almost all current, free AI models.

Downloading one of the models can take some time, as they are several GB in size and the download server does not always deliver quickly. If you would like to use an online AI model, select it from the drop-down menu below “LLM provider.”

Tips for using Anything LLM: Some Anything LLM menus are a little tricky. Changes to the settings usually have to be confirmed by clicking on “Save.”

However, the button for this quickly disappears from view on longer configuration pages. This is the case, for example, when changing the AI models under “Open Settings > LLM.”

Anyone who forgets to click the button will probably be surprised that the settings are not applied. It is therefore important to look out for a “Save” button every time you change the configuration.

In addition, the user interface in Anything LLM can be at least partially switched to another language under “Open settings > Customize > Display Language.”

We also switched on the ChatGPT 4o online language model as a test, with perfect results for questions about our supplementary dental insurance contract and other documents.

