Many users are concerned about what happens to their data when using cloud-based AI chatbots like ChatGPT, Gemini, or DeepSeek. While some subscriptions promise that the provider will not use personal data entered into the chatbot, it is hard to verify whether those terms really hold. You also need a stable and fast internet connection to use cloud AI. But if there’s no internet connection, what then? Well, there’s always an alternative.

One solution is to run AI applications locally. However, this requires the computer or laptop to have the right amount of processing power. There’s also an increasing number of standard applications that rely on AI now. But if a laptop’s hardware is optimized for the use of AI, you can work faster and more effectively with AI applications.

Working with local AI applications makes sense

Running AI applications locally not only reduces dependency on external platforms, but also creates a solid basis for data protection, data sovereignty, and reliability. Especially in small companies with sensitive customer information or in private households with personal data, the local use of AI increases trust. Local AI remains usable even if internet services are disrupted or the cloud provider has technical problems.

Response times also improve because requests no longer travel to a remote server and back, so network latency drops out of the picture. This makes it practical to use AI models in near-real-time scenarios such as image recognition, text generation, or voice control, provided the local hardware is fast enough.

What’s more, you can learn how to use AI free of charge. In many cases, the necessary software is available as an open source solution at no cost. Use these tools to learn how AI works and to benefit from AI-supported research in your private life too.

Why the NPU makes the difference

Without a specialized NPU, even modern notebooks quickly reach their limits in AI applications. Language models and image processing require enormous computing power that overwhelms conventional hardware. This results in long loading times, sluggish processes and greatly reduced battery life. This is precisely where the advantage of an integrated NPU comes into play.

The NPU handles the computationally intensive parts of AI processing independently and does not rely on the CPU or GPU. As a result, the system remains responsive overall, even if an AI service is running in the background or AI image processing is in progress. At the same time, the operating temperature remains low, fans remain quiet and the device runs stably, even in continuous operation. For local AI applications, the NPU is therefore not an add-on, but a basic requirement for smooth and usable performance.

NPUs significantly accelerate local AI

As specialized AI accelerators, NPUs enable computationally intensive models to be operated efficiently on standard end devices. This reduces energy consumption compared to purely CPU- or GPU-based approaches and makes local AI interesting in the first place.

An NPU is a special chip for accelerating tasks where conventional processors work inefficiently. NPU stands for “Neural Processing Unit.” The name refers to neural networks, which are used in language models, image recognition, and AI assistants. In contrast to a CPU, which flexibly executes various programs, an NPU concentrates on the calculations that are constantly performed in the field of AI. This allows it to handle them significantly faster and with less power.

An NPU takes on precisely those tasks where a CPU reaches its limits. AI applications calculate with a large number of numbers at the same time, often in the form of matrices. These are tables of numbers with rows and columns. In AI, they help to structure and calculate large amounts of data. Texts, images or language are converted into numbers and represented as matrices. This enables an AI model to carry out computing processes efficiently.
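To make the idea of "text as a matrix" concrete, here is a minimal Python sketch. It uses a toy one-hot encoding, where each word becomes a row with a single 1 in the column for that word. This is an illustrative assumption only: real language models use learned tokenizers and embeddings, and the function name `one_hot_matrix` is made up for this example.

```python
def one_hot_matrix(text):
    """Toy encoding: one row per word, one column per distinct word."""
    words = text.lower().split()
    vocab = sorted(set(words))                      # column order
    index = {word: i for i, word in enumerate(vocab)}
    # Each row contains a single 1 in the column that belongs to its word.
    matrix = [
        [1 if index[word] == col else 0 for col in range(len(vocab))]
        for word in words
    ]
    return vocab, matrix


vocab, matrix = one_hot_matrix("the cat sees the dog")
for word, row in zip("the cat sees the dog".split(), matrix):
    print(f"{word:>5} -> {row}")
```

Five words with four distinct entries produce a table of five rows and four columns, which is exactly the kind of number grid the model then calculates with.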

NPUs are designed to process many such matrices simultaneously. The CPU processes such arithmetic patterns one after the other, which costs time and energy. An NPU, on the other hand, was specially built to carry out many such operations in parallel.
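The following plain-Python sketch shows why this kind of work lends itself to parallel hardware. It multiplies two small matrices with ordinary loops, one step after another, purely for illustration. It does not model how any particular NPU or CPU actually schedules the work, and the helper names `matmul` and `multiply_add_count` are assumptions for this example.

```python
def matmul(a, b):
    """Multiply matrix a (rows x inner) by matrix b (inner x cols)."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each cell needs only row i of a and column j of b,
            # so no cell has to wait for any other cell.
            result[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return result


def multiply_add_count(rows, inner, cols):
    """Number of multiply-add steps in one matrix multiplication."""
    return rows * inner * cols


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
print(multiply_add_count(4096, 4096, 4096))        # 68719476736
```

Because every output cell is independent, hardware with many arithmetic units can compute large numbers of cells at the same time instead of walking through them in order. The second line of output shows the scale involved: a single multiplication of two 4096 x 4096 matrices already needs about 69 billion multiply-add steps.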

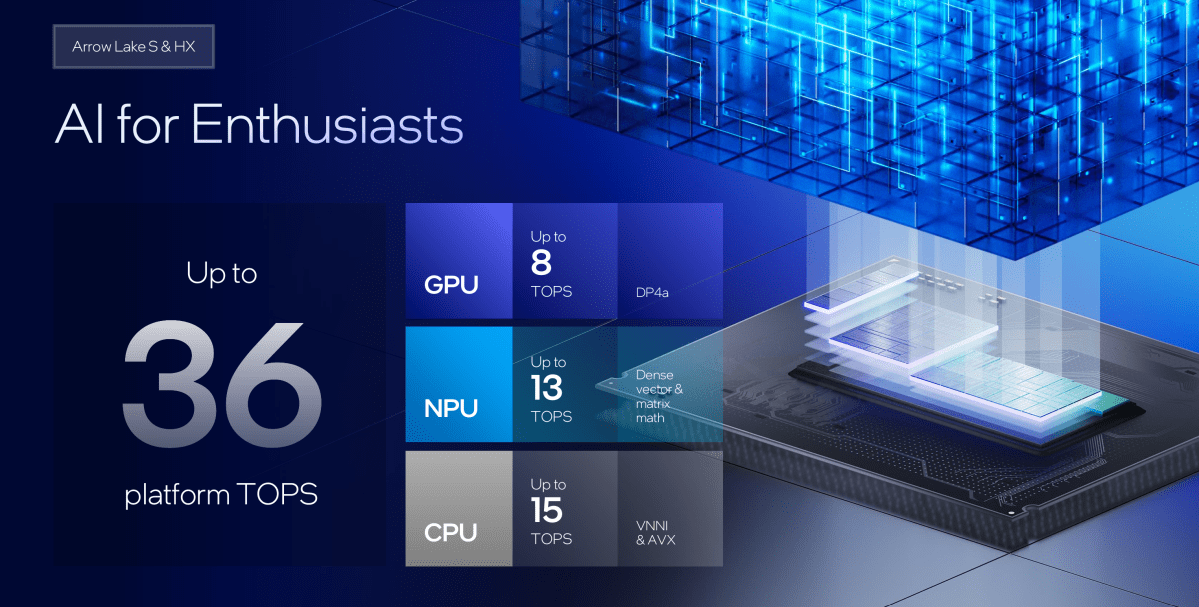

For users, this means that the NPU processes AI tasks such as voice input, object recognition, or automatic text generation faster and more efficiently. Meanwhile, the CPU remains free for other tasks such as the operating system, internet browser, or office applications. This ensures a smooth user experience without delays or high power consumption. Modern devices such as notebooks with Intel Core Ultra or Qualcomm Snapdragon X Elite already integrate their own NPUs. Apple has also been using similar technology in its chips for years (Apple Silicon M1 to M4).

AI-supported applications run locally and react quickly without transferring data to cloud servers. The NPU ensures smooth operation for image processing, text recognition, transcription, voice input or personalized suggestions. At the same time, it reduces the utilization of the system and saves battery power. It is therefore worthwhile opting for laptops with an NPU chip, especially if you are working with AI solutions. These do not have to be special AI chatbots. More and more local applications and games are using AI, even Windows 11 itself.

Open source brings AI locally to your computer: Ollama and Open WebUI

Open source solutions such as Ollama allow you to run LLMs on a notebook with an NPU chip free of charge. LLM stands for “Large Language Model”. LLMs form the heart of AI applications. They enable computers to understand natural language and react to it in a meaningful way.

Anyone using an AI to write texts, summarize emails, or answer questions is interacting with an LLM. The AI models help with formulating, explaining, translating, or correcting. Search engines, language assistants, and intelligent text editors also use LLMs in the background. The decisive factor here is not only the performance of the model, but also where it runs. If you operate an LLM locally, you can connect local AI applications to this local model. This means you are no longer dependent on the internet.

Ollama enables the operation of numerous LLMs, including free ones. These include DeepSeek-R1, Qwen 3, Llama 3.3, and many others. You simply install Ollama on your PC or laptop running Windows, Linux, or macOS. Once installed, you can operate Ollama via the command line in Windows or the terminal in macOS and Linux. Ollama provides the framework through which you can install various LLMs on your PC or notebook.
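Besides the command line, a running Ollama installation also answers HTTP requests on the local machine, which is how other applications can connect to the local model. The sketch below is a minimal example under several assumptions: Ollama is running at its default local address, a model with the tag `llama3.3` has already been downloaded, and the helper names `build_request` and `ask_local_model` are invented for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model, prompt):
    """Prepare a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model, prompt, timeout=120):
    """Send the prompt to the local model and return the generated text."""
    request = build_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


# Example call (needs a running Ollama server and a downloaded model):
# print(ask_local_model("llama3.3", "Explain what an NPU is in one sentence."))
```

Nothing in this exchange leaves the computer: the request goes to `localhost`, so it works without an internet connection once the model has been downloaded.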

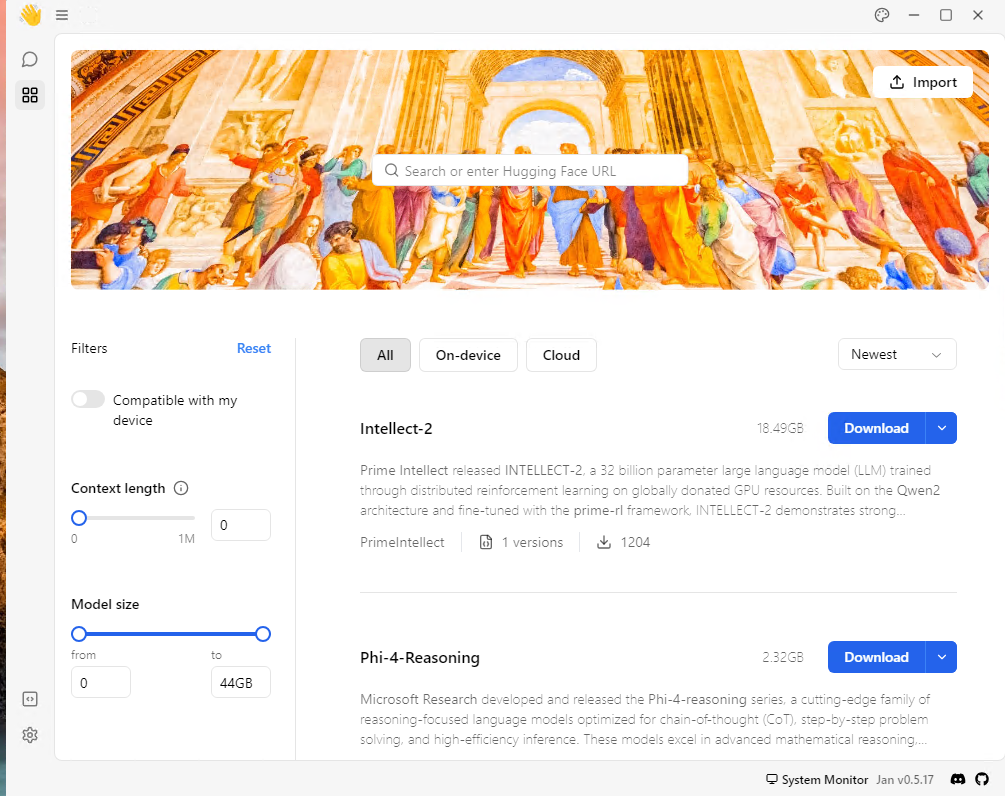

To work with Ollama in the same way as you are used to with AI applications such as ChatGPT, Gemini, or Microsoft Copilot, you also need a web front end. Here you can rely on Open WebUI, which is also available as a free, open-source tool.

What other local AI tools are available?

As an alternative to Ollama with Open WebUI, you can also use the more limited tool GPT4All. Another option in this area is Jan.ai, which runs open models such as DeepSeek-R1 locally and can also connect to cloud-hosted models such as Claude 3.7 or GPT-4 from OpenAI through their online services. To do this, install Jan.ai, start the program, and select the desired LLM.

Thomas Joos

Please note, however, that model downloads can quickly reach 20 GB or more. Additionally, it only makes sense to use them if your computer’s hardware is optimized for AI, ideally with an existing NPU.
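A rough rule of thumb helps to judge download sizes in advance: the file size is approximately the number of model parameters multiplied by the bytes stored per parameter. The sketch below applies that arithmetic. The parameter counts and bit widths are illustrative assumptions, the function name `estimated_size_gb` is made up for this example, and real model files deviate somewhat because of metadata and mixed precision.

```python
def estimated_size_gb(parameters_billion, bits_per_weight):
    """Approximate model file size in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = parameters_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Illustrative combinations of model size and precision.
for params, bits in [(8, 16), (8, 4), (32, 4), (70, 4)]:
    size = estimated_size_gb(params, bits)
    print(f"{params}B parameters at {bits} bits: about {size:.0f} GB")
```

By this estimate, an 8-billion-parameter model at 4 bits per weight needs about 4 GB, while a 70-billion-parameter model at the same precision comes to about 35 GB, which is well beyond the 20 GB mark.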

https://www.pcworld.com/article/2833430/running-ai-locally-on-your-laptop-what-you-need-to-know.html
