Modern notebooks with integrated AI hardware are changing the way artificial intelligence is used in everyday life. Instead of relying on external server farms, large language models, image generators, and transcription systems run directly on the user's own device.

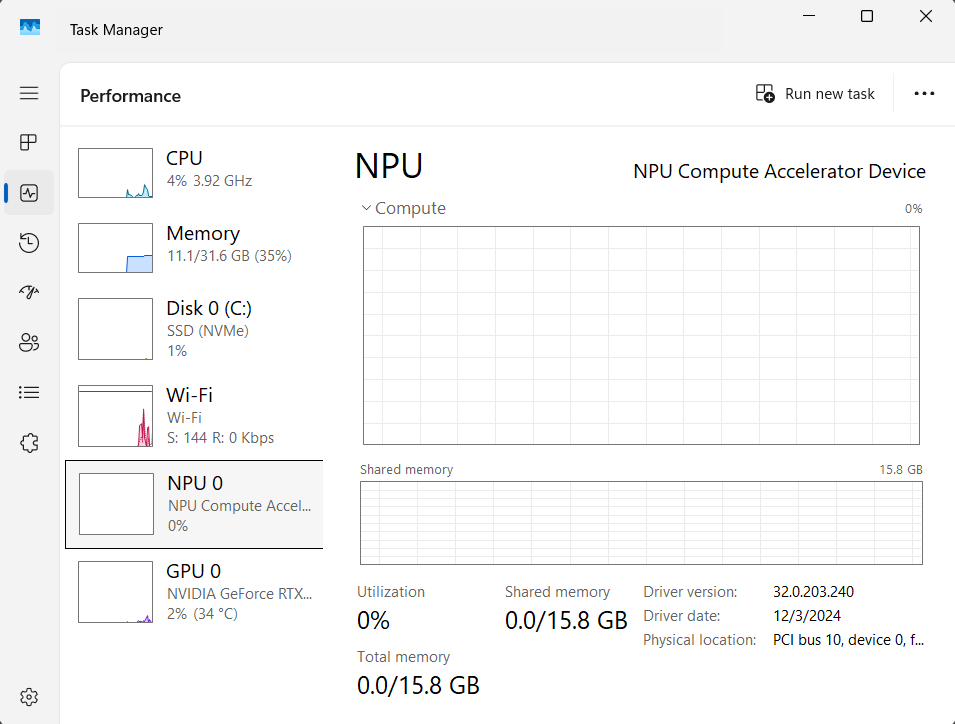

This is made possible by the combination of powerful CPUs, dedicated graphics processors and, at the center of this development, a Neural Processing Unit (NPU). An NPU is not just an add-on but a specialized accelerator designed specifically for the calculations that neural networks require.

It enables offline AI tools such as GPT4All or Stable Diffusion not only to start but also to respond quickly, with low energy consumption and consistent response times. Even with complex queries or multimodal tasks, performance remains stable. The days when AI was conceivable only as a cloud service are over.

Work where others are offline

As soon as the internet connection drops, the AI features of conventional laptops come to a standstill. An AI PC, on the other hand, remains operational, whether in airplane mode above the clouds, in the dead zones of rural regions, or on an overloaded rail network without a stable connection.

In such situations, the structural advantage of locally running AI systems becomes apparent. Jan.ai or GPT4All can be used to create, check, and revise texts, summarize notes intelligently, draft emails, and categorize appointments.

Foundry

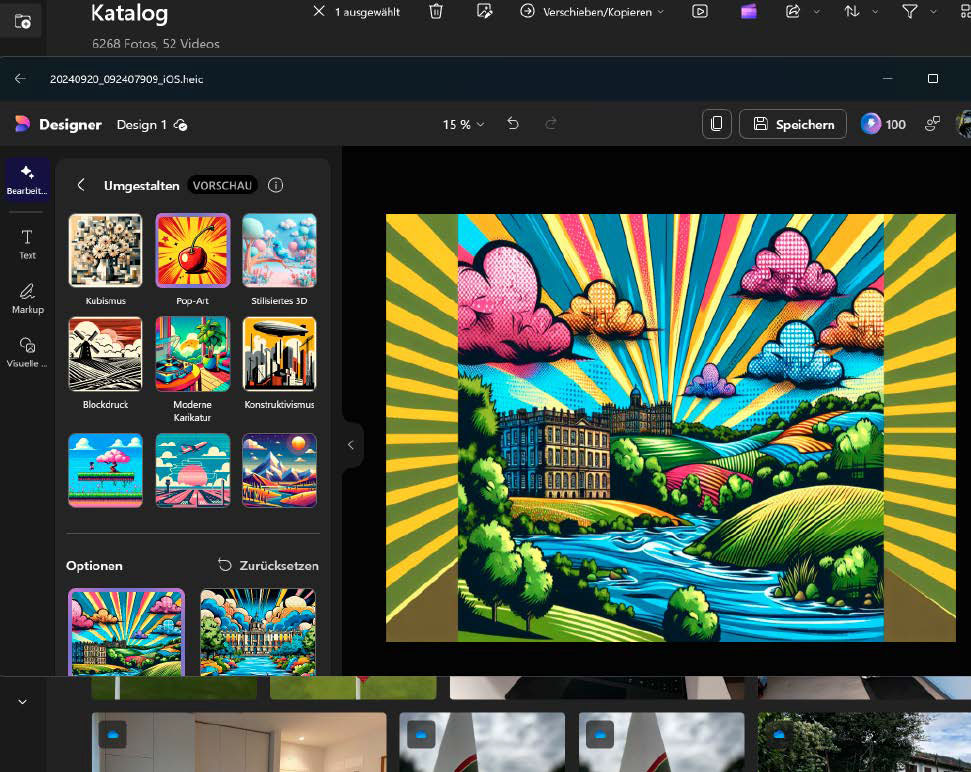

With AnythingLLM, contracts or meeting minutes can be searched for keywords without the documents leaving the device. Creative tasks such as creating illustrations via Stable Diffusion or post-processing images with Photo AI also work, even on devices without a permanent network connection.

Even demanding projects such as programming small tools or automatically generating shell scripts are possible if the corresponding models are installed. For frequent travelers, project managers, or creative professionals, this creates a comprehensive option for productive work, completely independent of infrastructure, network availability, or cloud access. An offline AI notebook does not replace a studio, but it does prevent downtime.

Sensitive content remains local

Data sovereignty is increasingly becoming a decisive factor in personal and professional life. Anyone who processes business reports, develops project ideas, or analyzes medical issues cannot afford any uncertainty about how their data is handled.

Public chatbots such as Gemini, ChatGPT, or Microsoft Copilot are helpful, but are not designed to protect sensitive data from misuse or unwanted analysis.

Local AI solutions, on the other hand, work without transmitting data to the internet. The models used, such as LLaMA, Mistral or DeepSeek, can be executed directly on the device without the content leaving the hardware.

This opens up completely new fields of application, particularly in areas with regulatory requirements such as healthcare, law, or research. AnythingLLM goes one step further: it combines classic chat interaction with a local knowledge base of Office documents, PDFs, and structured data. This turns a language model into an interactive tool for analyzing complex bodies of information, locally, offline, and in compliance with data protection regulations.
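The basic idea behind such a local knowledge base can be sketched in a few lines: split documents into chunks, find the chunks that best match a question, and hand only those excerpts to the model as context. This is a simplified illustration, not how AnythingLLM works internally; tools of this kind generally rely on embedding-based vector search, whereas the sketch below swaps in plain keyword overlap so it runs with the standard library alone. All function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters and digits as terms
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 50) -> list[str]:
    # Split a document into pieces of at most `size` words
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (document name, chunk) pairs sharing the most terms with the query."""
    query_terms = Counter(tokenize(query))
    scored = []
    for name, text in documents.items():
        for piece in chunk(text):
            piece_terms = Counter(tokenize(piece))
            # Count overlapping terms (keyword overlap stands in for vector similarity)
            score = sum(min(count, piece_terms[term]) for term, count in query_terms.items())
            if score > 0:
                scored.append((score, name, piece))
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, piece) for _, name, piece in scored[:k]]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    # Only the selected excerpts are passed to the local model, tagged with their source
    context = "\n\n".join(f"[{name}] {piece}" for name, piece in passages)
    return f"Answer using only the excerpts below.\n\n{context}\n\nQuestion: {query}"
```

With two files loaded into a dictionary, for example a contract and a set of meeting minutes, `retrieve("What is the notice period?", docs, k=1)` picks the contract excerpt, and `build_prompt` wraps it for whichever local model is installed. Nothing in this pipeline needs a network connection.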

NPU notebooks: new architecture, new possibilities

While traditional notebooks quickly reach their thermal or energy limits in AI applications, the new generation of Copilot PCs relies on specialized AI hardware. Models such as the Surface Laptop 6 or the Surface Pro 10 integrate a dedicated NPU directly into the Intel Core Ultra SoC, supplemented by high-performance CPU cores and integrated graphics.

The advantages are evident in typical everyday scenarios. Voice input via Copilot, Gemini, or ChatGPT can be analyzed without delay, image processing with AI tools takes place without cloud rendering, and even multimodal tasks, such as analyzing text, sound, and video simultaneously, run in real time. Microsoft couples the hardware closely with the operating system.

IDG

Windows 11 offers native NPU support, for example for Windows Studio Effects, live captions, automatic text recognition in images, or voice focus in video conferences. The systems are designed so that AI is not an add-on but an integral part of the overall system from the moment it is switched on, even without an internet connection.

Productive despite dead zones

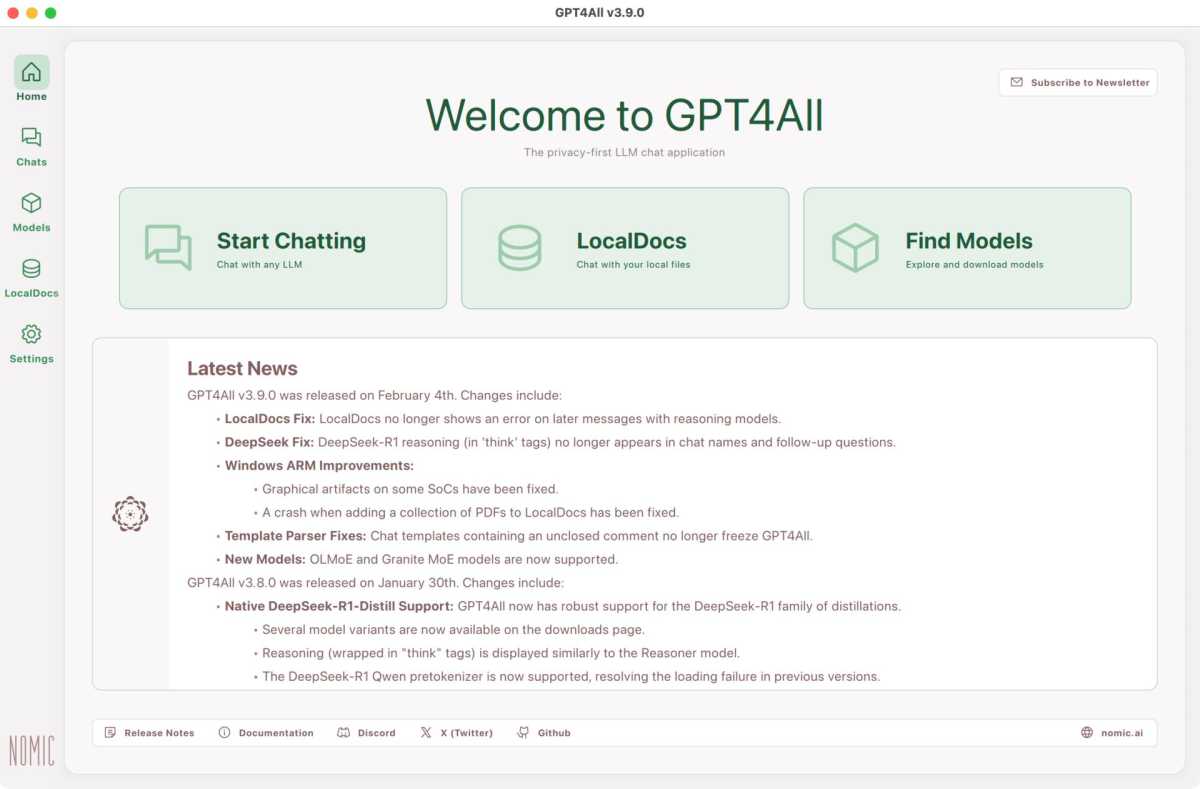

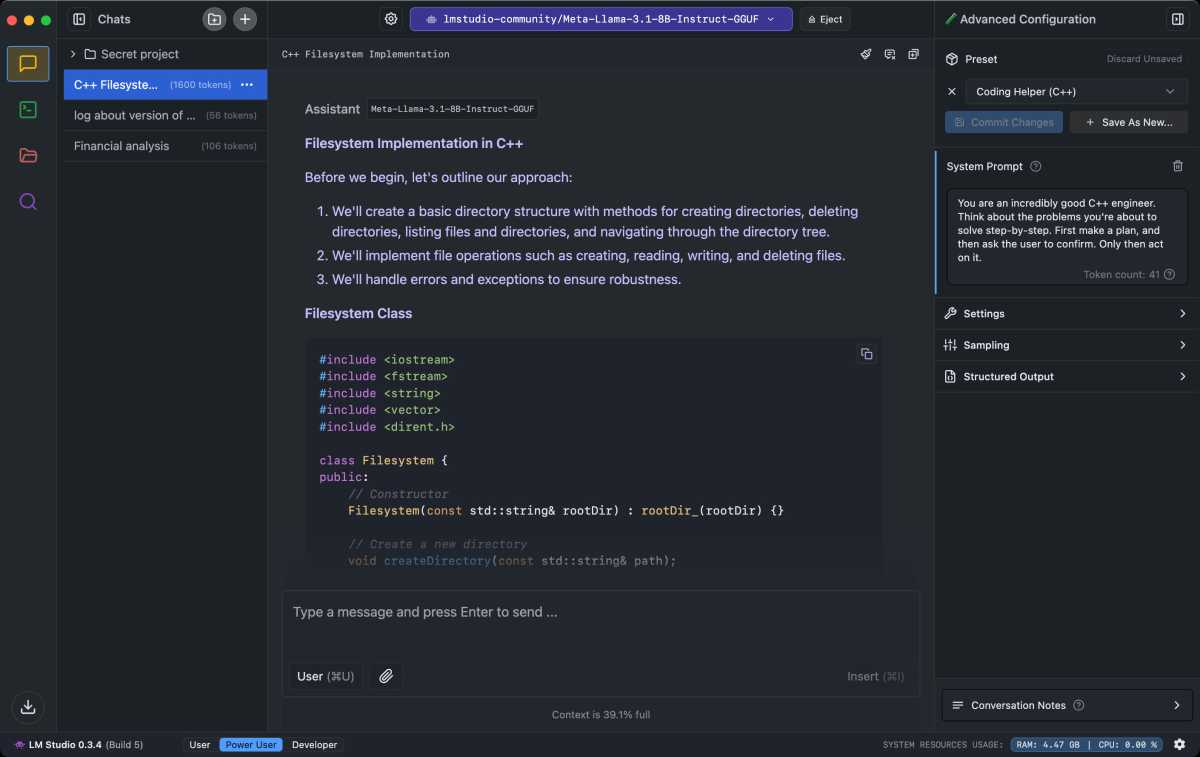

The tools for offline AI are now mature and stable in everyday use. GPT4All from Nomic AI is particularly suitable for beginners, with a user-friendly interface, uncomplicated model management, and support for numerous LLMs. Ollama is aimed at technically experienced users and offers terminal-based model management with a local API, ideal for adding AI support directly to your own applications or workflows. LM Studio, on the other hand, focuses on its graphical interface: models from Hugging Face can simply be searched for in the app, downloaded, and activated with a click.
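Ollama's local API is plain HTTP on the user's own machine. The following is a minimal sketch using only the Python standard library, assuming Ollama is running on its default port 11434 and that a model (here `mistral`, chosen only as an example) has already been downloaded. It calls the documented `/api/generate` endpoint with streaming switched off so the answer arrives as a single JSON object.

```python
import json
import urllib.request

# Ollama listens on localhost:11434 by default; /api/generate returns one completion
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for a locally installed model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.load(resp)["response"]
```

After `ollama pull mistral` in the terminal, a call such as `generate("mistral", "Draft a short out-of-office email.")` returns the model's text. Because the request only goes to `localhost`, it works in airplane mode as well.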

The LM Studio chatbot not only provides access to a large selection of AI models from Hugging Face but also allows their settings to be fine-tuned. There is a separate developer view for this.

LM Studio

Jan.ai is particularly versatile. The minimalist interface hides a highly functional architecture with support for multiple models, context-sensitive responses, and elegant interaction.

Local tools are also available in the creative area. With suitable hardware, Stable Diffusion delivers AI-generated images within a few seconds, while applications such as Photo AI automatically improve the quality of screenshots or video frames. A powerful NPU PC turns the mobile device into an autonomous creative studio, even without Wi-Fi, cloud access, or GPU calculation on third-party servers.

What counts on the move

The decisive factor for mobile use is not just whether a notebook can run AI, but how reliably it can do so offline. In addition to the CPU and GPU, the NPU plays a central role. It processes AI tasks in real time while conserving battery power and reducing the load on the rest of the system.
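Which processor a given app actually uses depends on its runtime. As one hedged illustration, assuming Intel's OpenVINO toolkit (not mentioned in the article) is installed, a script can ask which accelerators are visible and prefer the NPU when one is present. The preference order and helper names are hypothetical; without OpenVINO the sketch simply falls back to the CPU.

```python
# Hypothetical preference order for inference: NPU first, then GPU, then CPU
PREFERENCE = ("NPU", "GPU", "CPU")


def list_devices() -> list[str]:
    """Return the devices OpenVINO reports, or [] if OpenVINO is unavailable."""
    try:
        import openvino as ov
        return list(ov.Core().available_devices)
    except (ImportError, AttributeError, RuntimeError):
        return []


def pick_device(devices: list[str]) -> str:
    """Choose the most preferred device; names such as 'GPU.0' are matched by prefix."""
    for kind in PREFERENCE:
        for name in devices:
            if name.split(".")[0] == kind:
                return name
    return "CPU"
```

The result of `pick_device(list_devices())` can then be passed as the device name when compiling a model with OpenVINO. On a Core Ultra notebook with current drivers the list would be expected to include `NPU`, but that depends on the driver and runtime versions installed.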

Devices such as a Galaxy Book with an RTX 4050/4070 or the Surface Pro 10 with an Intel Core Ultra 7 CPU demonstrate that even complex language models such as Phi-2, Mistral, or Qwen run locally, smoothly and without the typical latencies of cloud services.

Copilot as a system assistant complements this setup, provided the software can access it. When traveling, you can compose emails, structure projects, prepare images, or generate text modules regardless of the network. Offline AI on NPU notebooks also turns the train's dining car, the departure gate, or the remote holiday home into a productive workspace.

Requirements and limitations

However, the hardware requirements are not trivial. Models such as LLaMA 2 or Mistral require several gigabytes of RAM; 16 GB is the practical minimum. Those working with larger prompts or context windows should plan for 32 or 64 GB. Storage requirements also increase, as many models occupy between 4 and 20 GB of SSD space.
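These figures follow from simple arithmetic: a model's weights occupy roughly parameter count × bits per weight ÷ 8 bytes. A 7-billion-parameter model therefore needs about 3.5 GB at 4-bit quantization and about 14 GB at 16 bits. The sketch below turns that into a rough check; the flat 6 GB reserve for the operating system, other apps, and the context cache is a hypothetical allowance, and real quantized model files are somewhat larger because they also store scaling data.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size: parameters x bits / 8 bytes (billions of bytes = GB)."""
    return params_billion * bits_per_weight / 8


def fits_in_ram(params_billion: float, bits_per_weight: float,
                ram_gb: float, reserve_gb: float = 6.0) -> bool:
    """Rough check whether the weights plus a hypothetical reserve fit into RAM."""
    return weight_memory_gb(params_billion, bits_per_weight) + reserve_gb <= ram_gb
```

By this estimate a 4-bit 7B model fits comfortably in 16 GB, the same model at 16 bits does not, and a 4-bit 70B model needs a 64 GB machine rather than 32 GB, which matches the RAM tiers recommended above.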

NPUs take care of inference, but depending on the tool, additional GPU support may be necessary, for example for image generation with Stable Diffusion.

Sam Singleton

Integration into the operating system is also important. Copilot PCs ensure deep integration between hardware, AI libraries, and system functions. Anyone working with older hardware will have to accept limitations.

The model quality also varies. Local LLMs do not yet consistently reach the level of GPT-4, but they are more controllable, always available, and more privacy-friendly. For many applications, especially when traveling, they are the more robust solution.

Offline AI under Linux: openness meets control

Offline AI also unfolds its potential on Linux systems, often with even greater flexibility. Tools such as Ollama, GPT4All, or LM Studio offer native support for Ubuntu, Fedora, and Arch-based distributions and can be installed directly from the terminal or as a Flatpak. The integration of open models such as Mistral, DeepSeek, or LLaMA works smoothly, as many projects rely on open source frameworks such as GGML or llama.cpp.

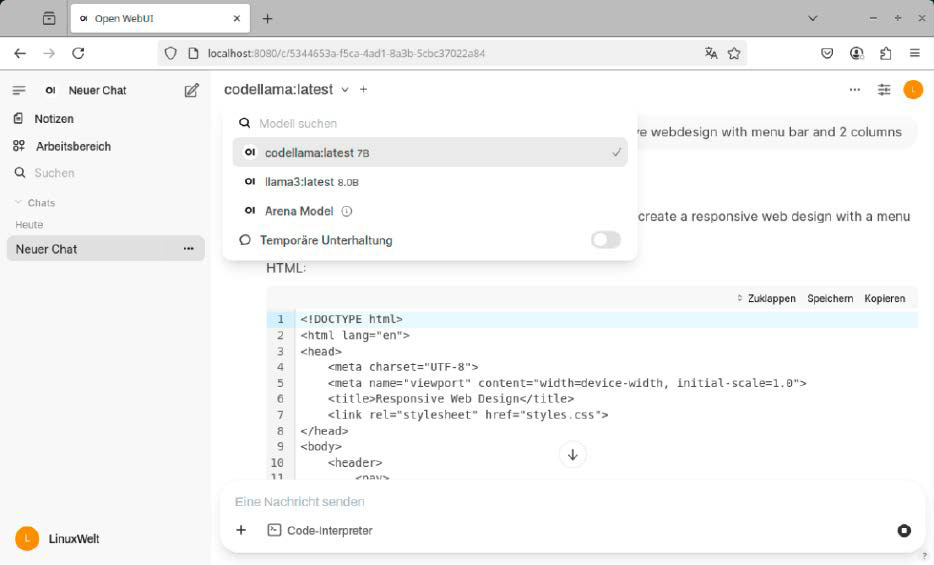

Browser interface for Ollama: Open WebUI can be set up quickly as a Python program or in a Docker container and provides a graphical chat interface.

IDG

Anyone working with Docker or Conda environments can build customized model setups, enable GPU support, or fine-tune inference parameters. This opens up various scenarios, especially for developers: scripting, data analysis, code completion, or testing your own prompt structures.
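Tuning inference parameters and comparing prompt structures can be scripted against the same local server. A minimal sketch, assuming Ollama on its default port: it uses the documented `/api/chat` endpoint and its `options` field, where `temperature` controls randomness and `num_ctx` sets the context window in tokens. The default values, the template syntax (Python's `string.Template`), and the helper names are illustrative choices, not recommendations.

```python
import json
import urllib.request
from string import Template

# Ollama's default local chat endpoint; adjust if the server runs elsewhere
CHAT_URL = "http://localhost:11434/api/chat"


def build_payload(model: str, system: str, user: str,
                  temperature: float = 0.2, num_ctx: int = 4096) -> dict:
    """Assemble a non-streaming chat request with explicit inference options."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "stream": False,
        # temperature controls randomness; num_ctx sets the context window in tokens
        "options": {"temperature": temperature, "num_ctx": num_ctx},
    }


def render_variants(template: str, values: list[dict]) -> list[str]:
    """Fill one prompt template with several value sets for side-by-side testing."""
    tmpl = Template(template)
    return [tmpl.substitute(v) for v in values]


def build_chat_request(payload: dict) -> urllib.request.Request:
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(payload: dict, timeout: float = 120.0) -> str:
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(payload), timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]
```

A test run might loop over `render_variants("Summarize in $n words: $text", [...])`, send each prompt through `chat(build_payload(...))` at different temperatures, and compare the answers. Keeping payload construction separate from the network call also makes the prompt logic easy to check without a running server.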

In conjunction with tiling desktops, reduced background processes and optimized energy management, the Linux notebook becomes a self-sufficient AI platform, without any vendor lock-in, with maximum control over every file and every computing operation.

Offline instead of at the mercy of the cloud

Offline AI on NPU notebooks is not a stopgap measure, but a paradigm shift. It offers independence, data protection, and responsiveness, even in environments without a network. Thanks to specialized chips, optimized software, and well thought-out integration in Windows 11 and the latest Linux kernel, new freedom is created for data-secure analyses, mobile creative processes, or productive work beyond the cloud.

The prerequisite for this is an AI PC that not only provides the necessary performance but also anchors AI at the system level. Anyone who needs dependable AI on the move should no longer count on the cloud but choose a notebook that makes it unnecessary.
