Despite billions of dollars of AI investment, Google’s Gemini has always struggled with image generation. The company’s Flash 2.5 model has long felt like a sidenote in comparison to far better generators from the likes of OpenAI, Midjourney, and Ideogram.

That all changed last week with the release of Google’s new Nano Banana image AI. The wonkily named new system is live for most Gemini users, and its capabilities are insane.

To be clear, Nano Banana still sucks at generating new AI images.

But it excels at something far more powerful, and potentially sinister—editing existing images to add elements that were never there, in a way that’s so seamless and convincing that even experts like myself can’t detect the changes.

That makes Nano Banana (and its inevitable copycats) both invaluable creative tools and an existential threat to the trustworthiness of photos—both new and historical.

In short, with tools like this in the world, you can never trust a photo you see online again.

Come fly with me

As soon as Google released Nano Banana, I started putting it through its paces. Lots of examples online—mine included—focus on cutesy and fun uses of Nano Banana’s powerful image-editing capabilities.

In my early testing, I placed my dog, Lance, into a Parisian street scene filled with piles of bananas and showed how I would look wearing a Tilley Airflo hat. (Answer: very good.)

Immediately, though, I saw the system’s potential for generating misinformation. To demonstrate this on a basic level, I tried editing my standard professional headshot to place myself into a variety of scenes around the world.

Here’s Nano Banana’s rendering of me on a beach in Maui.

If you’ve visited Wailea Beach, you’ll recognize the highly realistic form of the West Maui Mountains in soft focus in the background.

I also placed myself atop Mount Everest. My parka looks convincing; the fact that I'm still wearing my TravisMathew polo, less so.

200’s a crowd

These personal examples are fun. I’m sure I could post the Maui beach photo on social media and immediately expect a flurry of comments from friends asking how I enjoyed my trip.

But I was after something bigger. I wanted to see how Nano Banana would do at producing misinformation with potential for real-life impact.

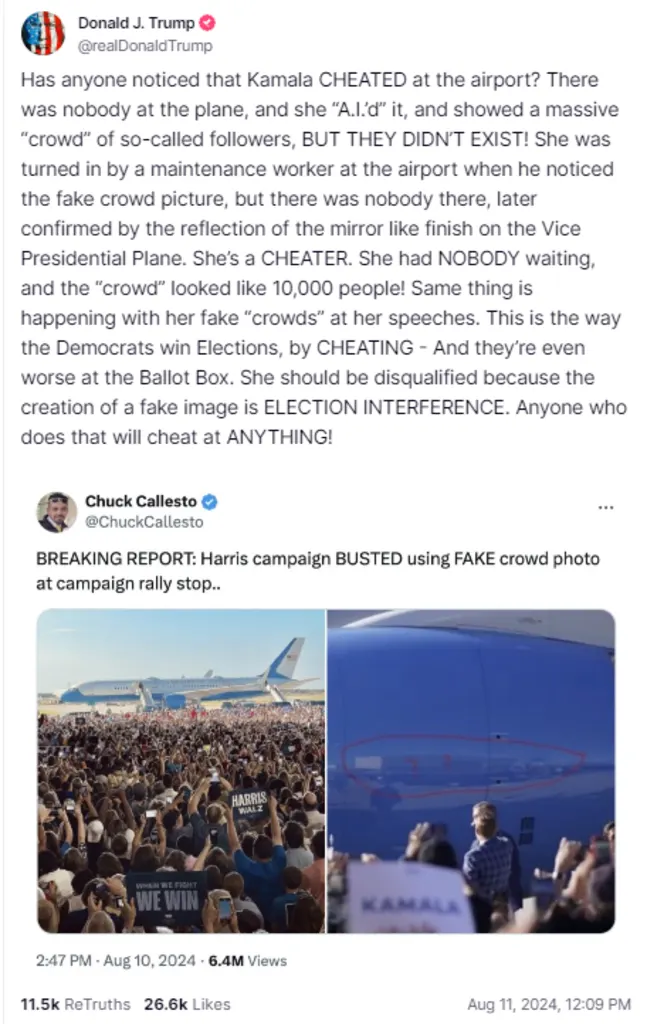

During last year's presidential election here in America, accusations of AI fakery flew between the two campaigns. In an especially infamous example, now-President Donald Trump accused Kamala Harris's campaign of using AI to fake the size of the crowd at one of her rallies.

All reputable accounts of the event confirm that the photos of the Harris rally were real. But I wondered if Nano Banana could create a fake visual of a much smaller crowd, using the real rally photo as input.

Here’s the result:

The edited version looks extremely realistic, in part because it keeps specific details from the actual photo, like the people in the foreground holding Harris-Walz signs and phones.

But the fake image gives the appearance that only around 200 people attended the event and were densely concentrated in a small space far from the plane, just as Trump’s campaign claimed.

If Nano Banana had existed at the time of the controversy, I could easily see an AI-doctored photo like this circulating on social media, as “proof” that the original crowd was smaller than Harris claimed.

Before, creating a carefully altered version of a real image with tools like Photoshop would have taken a skilled editor days—too long for the result to have much chance of making it into the news cycle and altering narratives.

Now, with powerful AI editors, a bad actor wishing to spread misinformation could convincingly alter photos in seconds, with no budget or editing skills needed.

Fly me to the moon

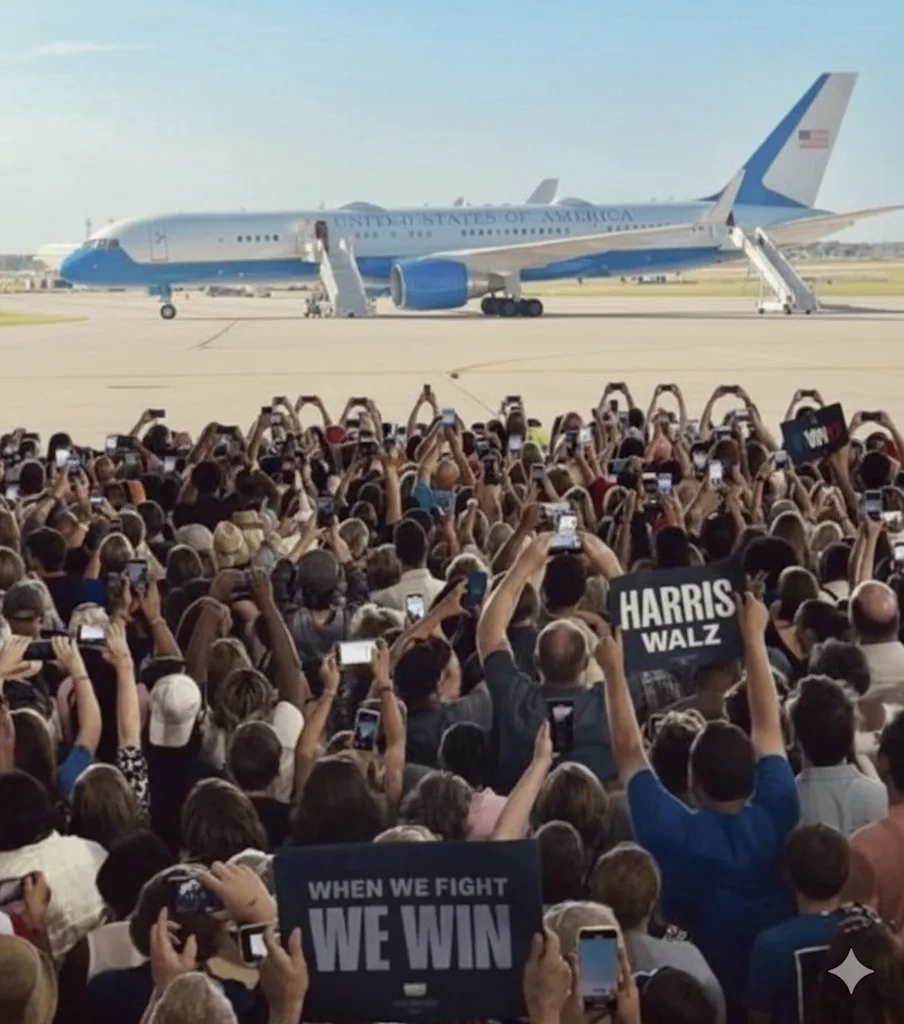

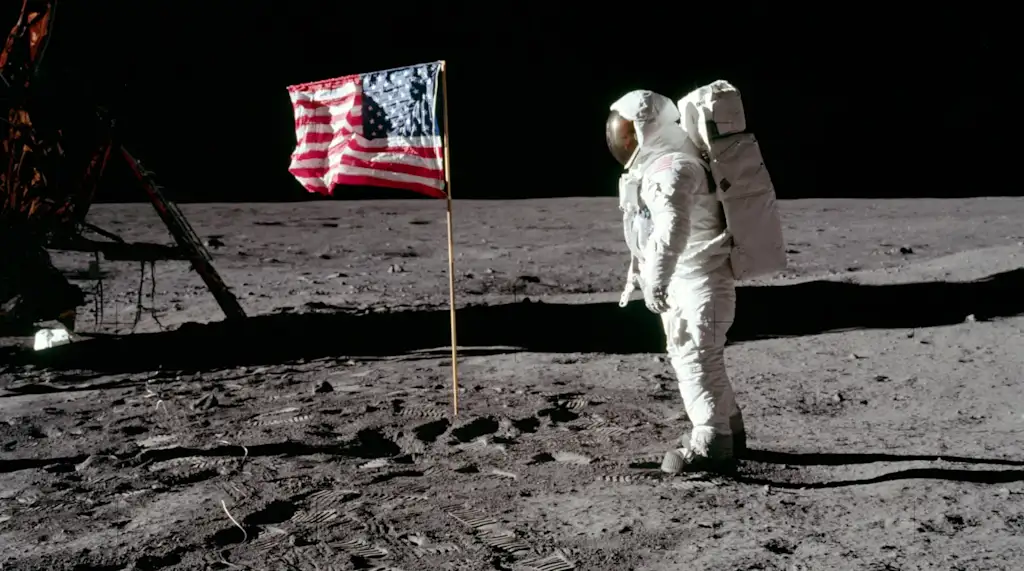

Having tested an example from the present day, I decided to turn my attention to a historical event that has yielded countless conspiracy theories: the 1969 moon landing.

Conspiracists often claim that the moon landing was staged in a studio. Again, there’s no actual evidence to support this. But I wondered if tools like Nano Banana could fake some.

To find out, I handed Nano Banana a real NASA photo of astronaut Buzz Aldrin on the moon.

I then asked it to pretend the photo had been faked, and to show it being created in a period-appropriate photo studio.

The resulting image is impressive in its imagined detail. A group of men (it was NASA in the 1960s—of course they’re all men!) in period-accurate clothing stand around a soundstage with a fake sky backdrop, fake lunar regolith on the floor, and a prop moon lander.

In the center of the regolith stands an actor in a space suit, his stance perfectly matching Aldrin’s slight forward lean in the actual photo. Various flats and other theatrical equipment are unceremoniously stacked to the sides of the room.

As a real-life professional photographer, I can vouch for the fact that the technical details in Nano Banana's image are spot-on. A giant key light above the astronaut actor stands in for the bright, atmosphere-free lighting of the lunar surface, while various lighting instruments cast shadows that perfectly match the lunar lander's shadow in the real image.

A photographer crouches on the floor, capturing the imagined astronaut actor from an angle that would indeed match the angle in the real-life photograph. Even the unique lighting on the slightly crumpled American flag—with a small circular shadow in the middle of the flag—matches the real image.

In short, if you were going to fake the moon landing, Nano Banana’s imagined soundstage would be a pretty reasonable photographic setup to use.

If you posted this AI photo on social media with a caption like “REVEALED! Deep in NASA’s archive, we found a photo that PROVES the moon landing was staged. The Federal Government doesn’t want you to see this COVER UP,” I’m certain that a critical mass of people would believe it.

But why stop there? After using Nano Banana to fake the moon landing, I figured I’d go even further back in history. I gave the system the Wright Brothers’ iconic 1903 photo of their first flight at Kitty Hawk, and asked the system to imagine that it, too, had been staged.

Sure enough, Nano Banana added a period-accurate wheeled stand to the plane.

Presumably, the plane could have been photographed on this wheeled stand, which could then be masked out in the darkroom to yield the iconic image we’ve all seen reprinted in textbooks for the last century.

Believe nothing

In many ways, Nano Banana is nothing new. People have been doctoring photos for almost as long as they’ve been taking them.

An iconic photo of Abraham Lincoln from 1860 is actually a composite of Lincoln’s head and the politician John Calhoun’s much more swole body, and other examples of historical photographic manipulation abound.

Still, the ease and speed with which Nano Banana can alter photos is new. Before, creating a convincing fake took skill and time. Now, it takes a cleverly written prompt and a few seconds.

To its credit, Google is well aware of these risks and is taking important steps to defend against them.

Each image created by Nano Banana comes with an easy-to-remove visible watermark in the lower right corner, as well as a harder-to-remove SynthID digital watermark embedded invisibly in the image's pixels.

This digital watermark travels with the image and can be read with special software. If a fake Nano Banana image started making the rounds online, Google could presumably scan it for an embedded SynthID and quickly confirm that it was AI-generated. It could likely even trace the image's provenance to the Gemini user who created it.

Google scientists have told me that the SynthID can survive common tactics that people use to obscure the origin of an image. Cropping a photo, or even taking a screenshot of it, won’t remove the embedded SynthID.

Google also has a robust and nuanced set of policies governing the use of Nano Banana. Creating fake images with the intent to deceive people would likely get a user banned, while creating them for artistic or research purposes, as I’ve done for this article, is generally allowed.

Still, once a groundbreaking new AI technology rolls out from one provider, others quickly copy it. Not all image generation companies will be as careful about provenance and security as Google.

The (rhinestone-studded, occasionally surfing) cat is out of the bag; now that tools like Nano Banana exist, we need to assume that every image we see online could have been created with one. Nano Banana and its ilk are so good that even photographic experts like myself won't be able to reliably spot their fakes.

As users, we therefore need to be consistently skeptical of visuals. Instead of trusting our eyes as we browse the Internet, our only recourse is to turn to reputation, provenance, and
