When people think about AI, they often think about models that run in the cloud, like ChatGPT or Google Gemini. But Microsoft has just introduced an incredibly simple way to run AI locally on your PC.

Microsoft announced Foundry Local this past week at its Build conference. It’s essentially a command-line tool that runs LLMs locally on your machine. Although it’s initially aimed at developers, it’s one of the easiest ways to try out local AI, simply because it does everything for you. And it does something else, too: it picks a version of the model optimized for your PC’s hardware.

One of the coolest Windows features that you probably don’t use is “winget,” which is like DoorDash for applications. Instead of navigating to a website or the Microsoft Store, finding the download link, and telling Windows where everything should go, you simply open a command line and “winget” what you want. Windows does everything else for you, automatically. You don’t need to log in to a third-party website, either.

Foundry Local works the same way. All you need to do is copy and paste two commands from this article, and you’ll be up and running in no time. Here’s how to do it, with a helping hand from Microsoft. Microsoft doesn’t say that you need a dedicated GPU or NPU, but one does help. You’ll need Windows 10 or 11, at least 8GB of RAM, and 3GB of storage (16GB of RAM and 15GB of disk space are recommended). A Copilot+ PC is optional, but you’ll benefit from a Qualcomm Snapdragon X Elite processor, an Nvidia RTX 2000-series GPU, an AMD Radeon 6000-series GPU, or newer hardware.
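If you’d like a quick sanity check against those numbers, the short Python sketch below classifies a machine as meeting the recommended spec, the minimum, or neither. The thresholds are simply the figures quoted above; the function names are hypothetical, RAM is passed in by hand (Python’s standard library has no portable way to read it), and free disk space comes from `shutil.disk_usage`. It says nothing about GPU or NPU support.

```python
import shutil

# Figures quoted in this article, in GB. They are not read from Foundry Local.
MIN_RAM_GB, MIN_DISK_GB = 8, 3
REC_RAM_GB, REC_DISK_GB = 16, 15


def check_requirements(ram_gb: float, free_disk_gb: float) -> str:
    """Classify a PC against the minimum and recommended RAM/disk figures."""
    if ram_gb >= REC_RAM_GB and free_disk_gb >= REC_DISK_GB:
        return "recommended"
    if ram_gb >= MIN_RAM_GB and free_disk_gb >= MIN_DISK_GB:
        return "minimum"
    return "below minimum"


def free_disk_gb(path: str = ".") -> float:
    """Free space on the drive holding `path`, in decimal gigabytes (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9
```

For example, `check_requirements(16, free_disk_gb())` tells you where a 16GB machine stands given the free space on the current drive.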

Mark Hachman / Foundry

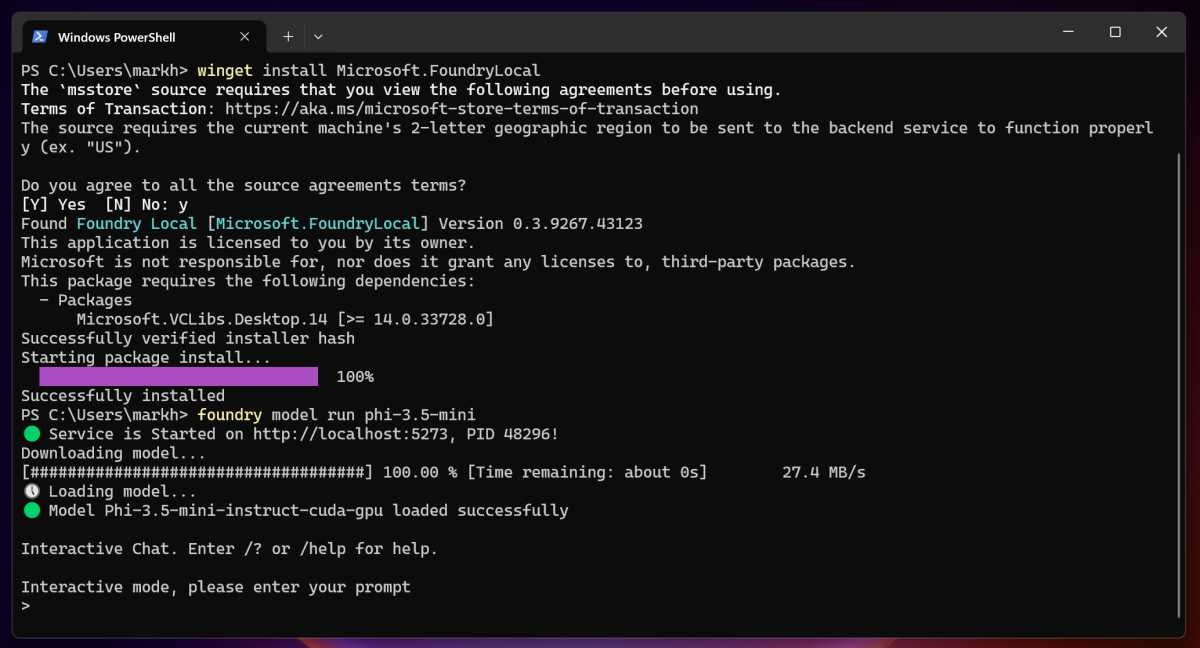

To use Foundry Local, first you’ll want to…

1.) Open a command-line terminal. Just press the Windows key and start typing “terminal”. You should see the Windows Terminal app pop up in the list of suggested apps, although it may also appear as Windows PowerShell. It doesn’t really matter.

2.) At the prompt, type:

winget install Microsoft.FoundryLocal

You’ll have to wait a minute or two while the necessary files download. But Microsoft will handle everything for you.

3.) Microsoft suggests running the Phi-3.5-mini model, which is a good choice: it’s small, pretty quick to download, and will probably give you a fast response. To download and run it, type:

foundry model run phi-3.5-mini

That’s all you need to do. The Terminal will then ask you to enter a prompt, such as “Is the sky blue on Mars?” or anything else you like.

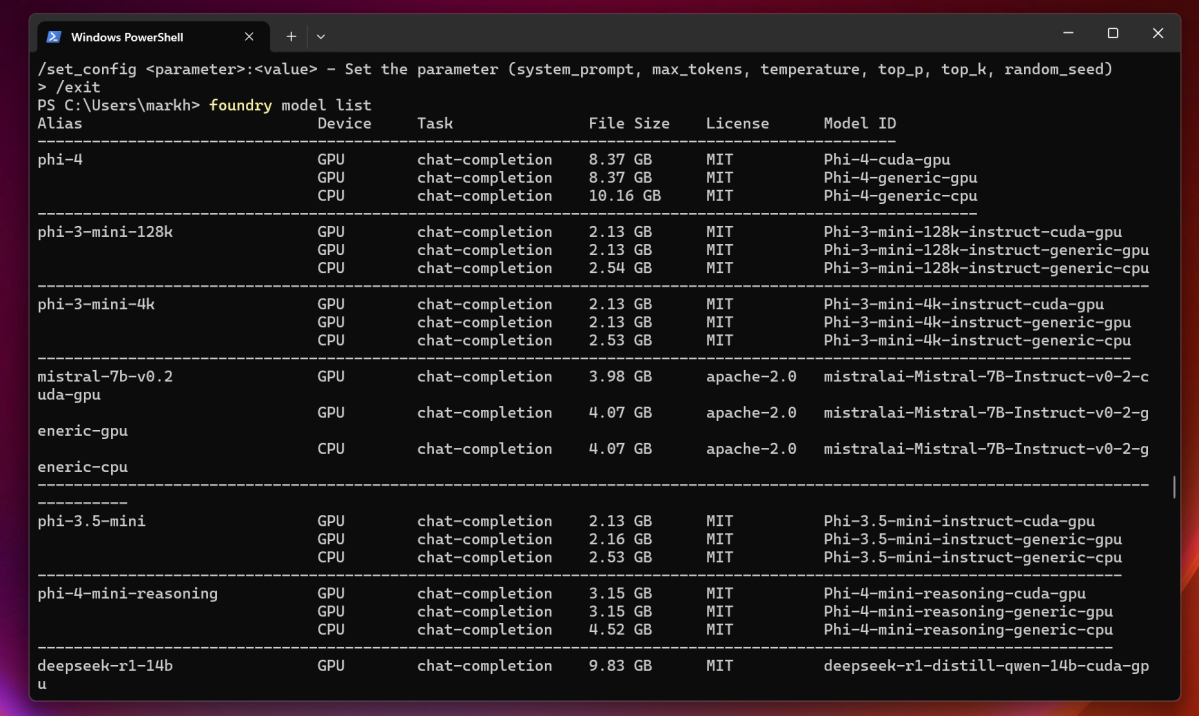

4.) You don’t have to stick with just the Phi-3.5-mini model, either. You can type

foundry model list

to get a list of available models. Just replace “phi-3.5-mini” with one of the available models and you’re good to go. (Use the model name from the left-hand column, but don’t sweat it: it seemed to know what I wanted.)

Again, what’s nice about Foundry Local is that it runs locally on your PC, even though the Phi-3.5-mini model insisted that it was communicating with Microsoft to process the information. (To double-check, I put my PC into airplane mode.) It’s also quick and efficient, in part because Foundry Local downloads the version of the model best suited to your PC: if you have an available NPU or GPU, it picks the right variant.
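The idea that Foundry Local “picks the right version” for your hardware can be pictured as a simple preference rule. The sketch below is hypothetical throughout: the `Hardware` fields, the variant labels, and the NPU-then-GPU-then-CPU order are assumptions chosen for illustration, not Foundry Local’s documented selection logic.

```python
from dataclasses import dataclass

# Assumed preference order, for illustration only.
PREFERENCE = ("npu", "gpu", "cpu")


@dataclass
class Hardware:
    """Hypothetical summary of a PC; Foundry Local does its own detection."""
    has_npu: bool = False
    has_gpu: bool = False


def pick_variant(hw: Hardware, available: set[str]) -> str:
    """Pick the most preferred variant that both the PC and the model support."""
    usable = {"cpu"}  # assume every PC can fall back to a CPU build
    if hw.has_gpu:
        usable.add("gpu")
    if hw.has_npu:
        usable.add("npu")
    for kind in PREFERENCE:
        if kind in usable and kind in available:
            return kind
    raise ValueError("no variant of this model runs on this hardware")
```

For example, `pick_variant(Hardware(has_gpu=True), {"gpu", "cpu"})` returns `"gpu"`, while a PC with only an NPU falls back to `"cpu"` when the model ships no NPU build. The point of checking both sets is that an accelerator only helps if the model actually comes in a version built for it.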

Foundry Local is designed for developers, so you can branch out and convert other models to run on your PC, or even, hypothetically, tap into “agents” to perform tasks. The example videos I viewed suggested tasks such as extracting text, which the Text Extractor widget being built into the Windows Snipping Tool already handles. You may as well use that instead.

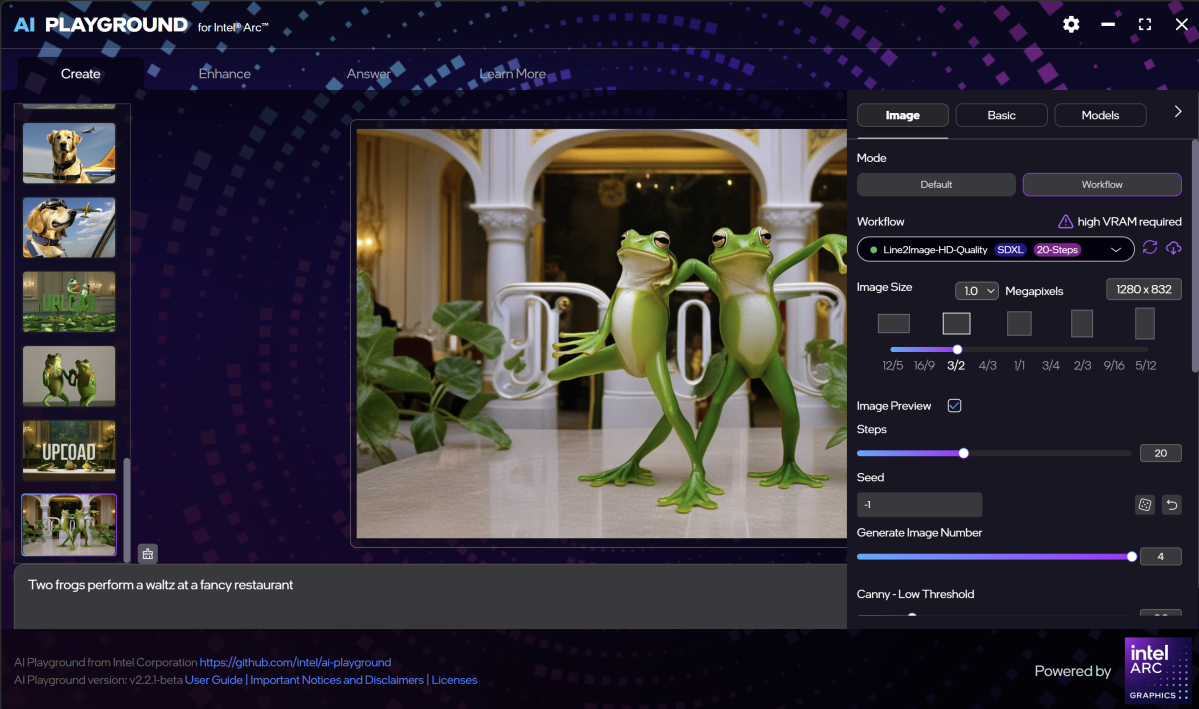

I’ve argued before that hardware makers and chipmakers need to invest in local AI app development. Intel’s AI Playground does just that, and it’s simple and easy to use. But Intel has restricted it to a subset of its own processor lineup, and at the end of the day, it’s optimized just for Intel processors.

Mark Hachman / Foundry

Foundry Local is open to everyone, but for now it’s just an LLM chatbot. That’s handy, and Microsoft has really only just begun work on it. Could we see AI art added to it? The ability to upload your own documents and train the model on them? Who knows.

I’m not here to tell you that Foundry Local is better than ChatGPT, Gemini, or Copilot running in the cloud. It’s not. Foundry Local is a local, text-based application that can’t do anything more than a bunch of available cloud-based services already can. But it’s very quick and easy to run, and Microsoft is quietly building it into Windows. That might bear fruit in the future.

https://www.pcworld.com/article/2792129/windows-11s-sneaky-new-ai-tool-is-a-game-changer.html
