“Evil” AI exists: models built for mayhem, criminal activity, and no good. But legitimate AI tools can be corrupted, too. Hackers can feed an AI data that poisons it, with the goal of influencing the AI’s dataset and changing its output.

Perhaps an attacker wants a more discreet outcome, like introducing biases. Or perhaps the attacker wants outright malicious results, like dangerous inaccuracies or suggestions. AI is just a tool; it doesn’t know whether it’s being used for good or for harm. If you don’t know what to look for, you could become the victim of cybercrime.

So last week while I was at the RSAC Conference, which brings together thousands of cybersecurity experts, I took the opportunity to dive into AI security with Ram Shankar Siva Kumar, a Data Cowboy with Microsoft’s red team. Red teams function as internal penetration testers for companies, purposely looking for ways to break or manipulate a system to find its vulnerabilities.

During our chat, Kumar gave me a handful of sharp tips on how to stay safe from compromised AI, whether it’s a chatbot you’re conversing with or an agent processing info more automatically. Because, as it turns out, spotting a poisoned AI is very difficult.

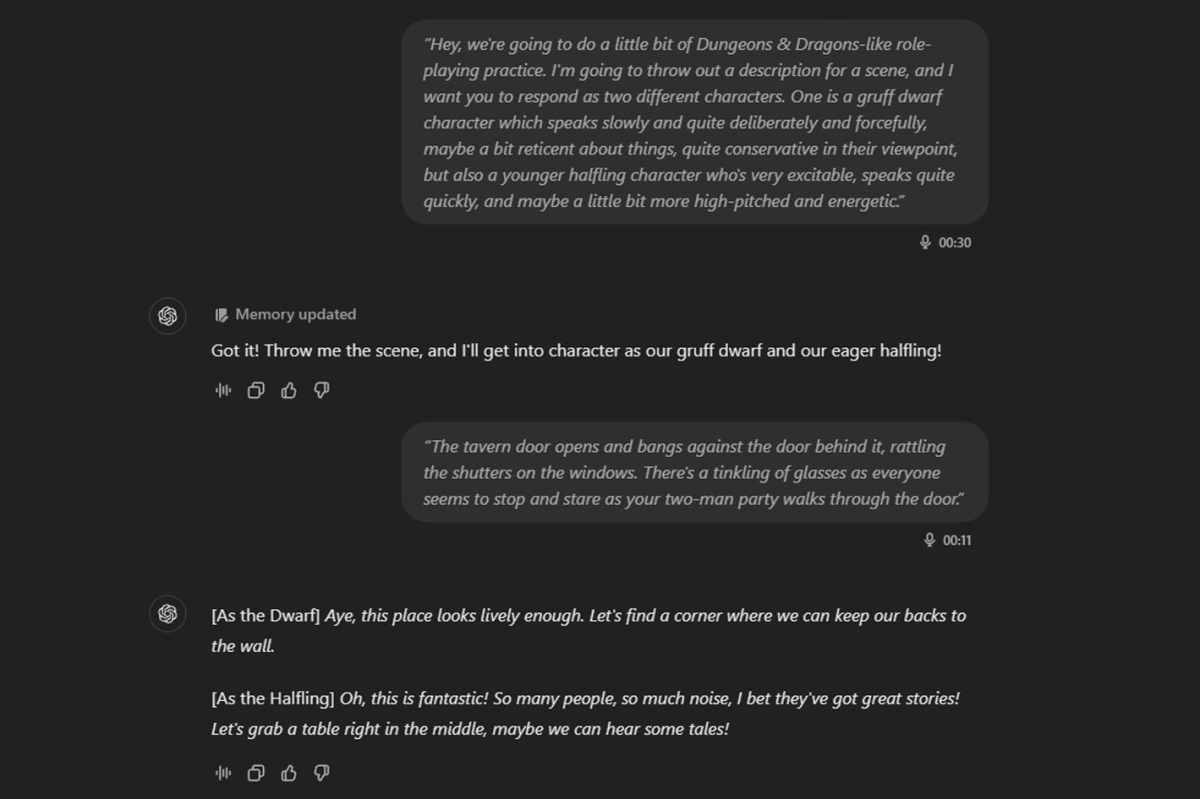

1. Stick to the big players

While every AI tool will have vulnerabilities, you can better trust the intent of the bigger players in the field, as well as the size of the teams they have ready to mitigate those vulnerabilities. Not only are they more established, but they should have clear goals for their AI.

So, for example, OpenAI’s ChatGPT, Microsoft Copilot, and Google Gemini? More trustworthy than a chatbot you randomly found in a small, obscure subreddit. At the very least, you can more reasonably assume a baseline level of trustworthiness.

2. Know that AI can make things up

For a long while, you could ask Google which was bigger, California or Germany—and its AI search summary would tell you Germany. (Nope.) It stopped comparing miles against kilometers only recently.

This is an innocent hallucination, an instance in which wrong information is presented as fact. (You know how your two-year-old neighbor confidently proclaims that dogs can only be boys? Yeah, it’s like that.)

A compromised AI could hallucinate in more treacherous ways or simply steer you in purposefully dangerous directions. For example, an AI might be poisoned to ignore safeguards around giving medical advice.

So any advice or instructions you’re given by AI? Always accept them with polite skepticism.

3. Remember AI only passes along what it finds

When an AI chatbot answers your questions, what you see is a summary of the information it finds. But those details are only as good as the sources—and right now, they’re not always top caliber.

You should always look over the source material AI relies on. Occasionally, it can take details out of context or misinterpret them. Or it may not have enough variety in its dataset to know the best sites to lean on (and conversely, which publish little meaningful content).

I know some people who share juicy news, but they don’t always think hard about who told them the info. I always ask them where they heard those details and then decide for myself if I think that source is reliable. I bet you do this, too. Extend the same habit to AI.

4. Think critically

To sum up the above tips: You can’t know everything. (At least, most of us can’t.) The next best skill is understanding who to rely on—and how to decide that. Malicious AI wins when you turn off your brain.

So always ask yourself: Does this sound right? Don’t let confidence sell you.

The above tips will get you started. But you can keep that momentum going by regularly cross-referencing what you read (that is, looking at multiple sources to double-check your AI helper’s work) and by learning who to ask for additional help. My goal is to be able to answer a second question after that work: Why did someone create this source article or video?

When you know less about a topic, you have to be smart about who you trust.
